Blog

Technical deep-dives on AI, machine learning, and software engineering.

On July 2, 2019, a single line of code brought Cloudflare's global infrastructure to its knees, causing an 82% drop in traffic across their network of nearly 700,000 customers ([1] "Details of the Cloudflare Outage on July 2, 2019"). The culprit wasn't a major architectural flaw or a complex system crash; it was an innocuous regular expression in their WAF ruleset that triggered catastrophic backtracking.

Two years later, in 2021, the npm tar package, used by millions of developers, was found to have a serious vulnerability: its path sanitization logic failed to handle repeated path roots ([2] CVE-2021-32804). Two different scales, same fundamental pattern: code that passed all standard tests but harbored lurking edge cases that would eventually surface in production.

Every day, engineering teams face a similar challenge: code that works flawlessly for the common case but breaks in subtle, unexpected ways. Whether you're processing billions of requests through a WAF or sanitizing file paths in a utility function, the patterns of system failure remain remarkably consistent. Edge cases don't discriminate by scale; they merely wait for the right conditions to emerge.

What's particularly devastating about such system failures is their economics. A critical bug costs far more to fix in production than in review, and the bill grows every hour it stays live: what starts as a simple code fix becomes a full-scale incident response, complete with customer escalations, emergency patches, and lost engineering time.

Yet while our systems have grown exponentially more complex, our approach to catching these failures hasn't fundamentally evolved. We still rely heavily on manual review and hope — hope that someone will spot potential issues, hope that our test cases are comprehensive enough, hope that production behavior matches our assumptions. There is a critical gap in engineering excellence that becomes more pronounced as systems scale and teams grow — one that's costing companies millions in incident response, lost productivity, and damaged customer trust.

This is not just about writing better tests or being more thorough in code review. It's about fundamentally rethinking how we approach the discovery of edge cases or potential failure modes in modern software development. The teams that will define the next decade of engineering excellence will be those that solve this challenge systematically, turning edge case discovery from an art dependent on individual expertise into a science powered by automation.

We've all done it. The pull request looks squeaky clean, tests are green, the happy path works locally. Ship it.

Alas, this is how subtle bugs sneak in. Not through messy code or missing documentation, but through untested edge cases — the kind that pass CI but fail mysteriously in production.

85% test coverage sounds impressive, but it usually means 100% coverage of obvious cases and 0% of interesting ones. An API endpoint for file uploads might handle standard PNGs perfectly, but fail silently on truncated files or concurrent requests. Your testing blind spots represent not just your future incidents, but gaps in system understanding. Coverage numbers hide these gaps, and teams optimize for a metric that doesn't capture what matters (also see: Goodhart’s Law).
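
To make the coverage point concrete, here is a minimal sketch of a hypothetical upload helper (the names `png_dimensions` and `make_header` are illustrative, not from any real codebase). Two tests execute every line, so line coverage reads 100%, yet a file truncated mid-header, which no test tried, escapes as a `struct.error` instead of the `ValueError` that callers are written to handle.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

def png_dimensions(data: bytes) -> tuple[int, int]:
    """Hypothetical upload helper: read width and height from the IHDR chunk."""
    if data[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG")
    # Bytes 8-15 hold the IHDR chunk length and type; width and height follow.
    width, height = struct.unpack(">II", data[16:24])
    return width, height

def make_header(width: int, height: int) -> bytes:
    """Build just enough of a PNG for png_dimensions to read."""
    return PNG_SIGNATURE + struct.pack(">I", 13) + b"IHDR" + struct.pack(">II", width, height)

# These two tests execute every line of png_dimensions: 100% line coverage.
assert png_dimensions(make_header(640, 480)) == (640, 480)
try:
    png_dimensions(b"GIF89a")
    raise AssertionError("expected ValueError")
except ValueError:
    pass

# The edge case the suite never tried: a file truncated mid-header.
try:
    png_dimensions(make_header(640, 480)[:20])
except ValueError:
    print("rejected cleanly")
except struct.error as exc:
    # Callers that only catch ValueError will let this escape.
    print(f"unexpected struct.error: {exc}")
```

The coverage report has nothing to flag here; the gap is in which inputs were tried, not which lines ran.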

Happy path engineering has a compounding cost. It starts subtly: engineers move slower around uncertain code. They add defensive checks and schedule additional reviews. Each edge case becomes a small tax on velocity.

Then it accelerates. A team shipping twice as fast as their peers suddenly finds themselves firefighting twice as often. They start patching symptoms instead of fixing causes, each change precariously balanced on previous workarounds. Engineers mutter "I'll fix it properly later" while juggling massive context in their heads. Their "lean" testing approach created a hidden debt, now coming due with interest.

Teams optimizing purely for speed often become the slowest teams within months. Not because they write worse code, but because they don't trust their code. This is also why "vibe coding" really only works for greenfield projects, not mature codebases with complex nuances and years of battle scars.

We know we should test thoroughly, but often don't. Why? This gap isn't one of knowledge but of psychology.

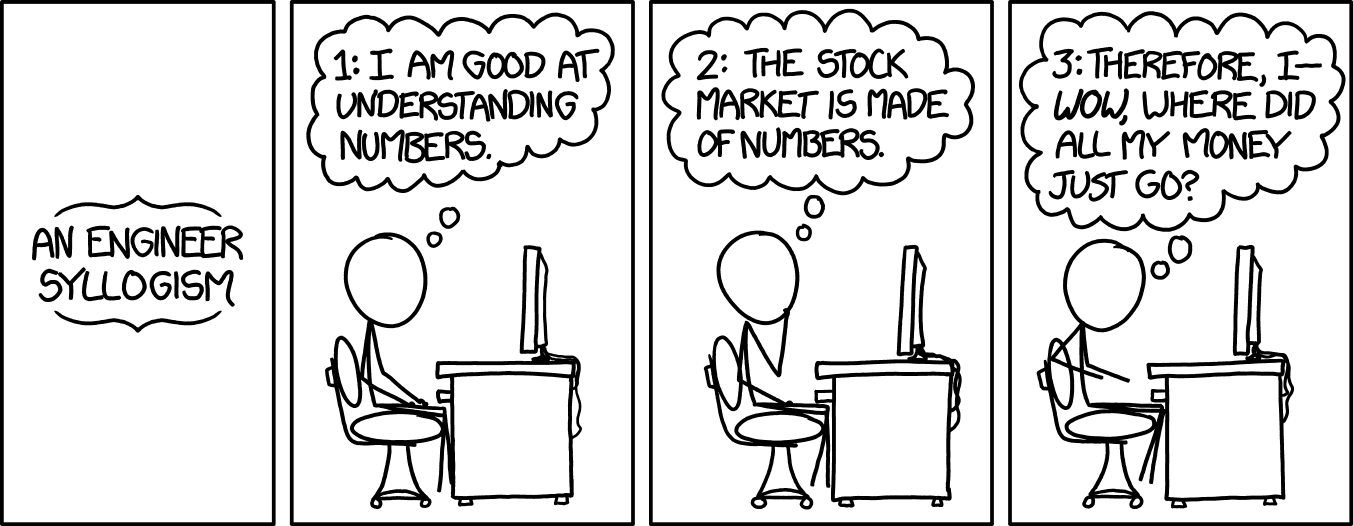

Humans are optimists when writing code. We visualize the happy path because that's what we're building for. Anticipating corner cases, or hidden failure conditions, requires a different mindset: systematic pessimism. This context switch is expensive, and its cost compounds with system complexity.

Consider the typical thought process when reviewing code:

Each pass demands full context. Each layer of depth multiplies cognitive load. No wonder engineers often stop at pass one.

The deeper problem here is anchoring bias. Once you understand how code works, that understanding becomes a lens that distorts everything else. Your brain automatically filters edge cases that don't fit your initial model. This happens to everyone, even experienced engineers who know to look for it. That's why your second and third passes through code find progressively fewer issues — not because the code is getting better, but because your mental model is getting more rigid.

Confidence fuels velocity, but overconfidence breeds bugs. Finding the balance is tricky.

| Too little confidence | Too much confidence |
|---|---|

Psychology gets harder at scale. As teams grow, system knowledge fragments across people and teams until no one holds the complete picture. Context, once shared casually across a lunch table, becomes expensive to maintain and share. Assumptions that worked for a small team multiply silently across microservices and repositories. Edge cases that once affected a single service now cascade through dozens of interconnected systems, creating combinations no one predicted.

A two-person team can keep their entire system in their heads. A twenty-person team needs processes. A hundred-person team needs automation.

This isn't just about size. Conway's Law also works in reverse: system complexity shapes team psychology. The more distributed your system, the more distributed your thinking must become.

I believe that the best teams address this with systems, not just willpower. They build tools and processes that make edge-case testing natural rather than heroic, and they derive confidence from systematic understanding, not just familiarity.

Edge cases tell better stories than happy paths. They reveal how systems actually behave, not how we wish they behaved. Every unexpected failure teaches us something fundamental about our system's resilience.

Great engineering teams understand this instinctively. They treat edge cases not as annoyances, but as signals. Each type reveals something different:

The deeper you look, the more chances for failure emerge. Performance degradation under load. Race conditions in concurrent operations. Security vulnerabilities from injection attacks. Data privacy leaks. Each category reveals different aspects of system behavior, each demanding its own approach to detection and prevention.

Modern systems face all these challenges simultaneously. A payment service doesn't just handle numerical edge cases; it has to handle them securely, at scale, with zero data leaks, while gracefully managing third-party outages. This combinatorial explosion of possible failure modes defines modern software complexity.
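
One numerical edge case a payment service runs into early is splitting an amount into installments. The sketch below is illustrative only, with hypothetical helper names, and assumes amounts are stored as integer cents: rounding each floating-point share independently loses a cent on 100.00 / 3, while distributing the integer remainder keeps the shares summing exactly to the total.

```python
def split_naive(total: float, parts: int) -> list[float]:
    """Round each share on its own; the shares can drift from the total."""
    return [round(total / parts, 2)] * parts

def split_cents(total_cents: int, parts: int) -> list[int]:
    """Split integer cents so shares differ by at most one cent and sum exactly to the total."""
    if parts <= 0:
        raise ValueError("parts must be positive")
    base, remainder = divmod(total_cents, parts)
    # The first `remainder` shares absorb one extra cent each.
    return [base + 1 if i < remainder else base for i in range(parts)]

print(sum(split_naive(100.00, 3)))  # roughly 99.99: a cent short of 100.00
print(split_cents(10_000, 3))       # [3334, 3333, 3333]
```

Working in integer cents sidesteps binary floating-point representation entirely; which share absorbs the extra cent is a business rule, and first-shares-first is just one choice.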

But teams that embrace systematic pessimism gain compounding advantages: they (a) build better mental models by thinking deeply about system behavior, which compounds into better architectural decisions; (b) catch problems earlier, spotting potential issues during code review instead of during incidents; and (c) write more resilient code, especially code that makes possible failures obvious. This subtle shift in approach pays dividends as the system scales.

Traditional testing starts with happy paths and works outward. This made sense when systems were simpler. It doesn't scale.

Modern systems need a different mindset: failure discovery as a first-class process. This doesn't mean engineers must exhaustively imagine every edge case or failure scenario before writing a line of code. Rather, it means building failure discovery—whether human or AI-driven—into your development workflow.

The approach is practical and lightweight:

This is transformative for engineering velocity. When edge case discovery becomes systematic,

The tooling landscape is evolving to support this workflow. AI can now identify edge cases that humans might miss, while requiring minimal additional effort from engineers. Static analysis can verify boundary conditions. Property-based testing can explore edge cases systematically.
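
As a rough illustration of what property-based testing does, here is a hand-rolled sketch in plain Python (tools such as Hypothesis do this far more thoroughly). The sanitizer is hypothetical and only mimics the class of bug described for CVE-2021-32804 (stripping a single path root); it is not node-tar's code, and `fixed_strip_root` is a simplified fix for illustration. Instead of hand-picking inputs, we state a property ("sanitized paths are never absolute"), generate random inputs, and shrink any failure to a minimal case.

```python
import random

def naive_strip_root(path: str) -> str:
    """Hypothetical sanitizer: removes only ONE leading '/'."""
    return path[1:] if path.startswith("/") else path

def fixed_strip_root(path: str) -> str:
    """Simplified fix for illustration: remove every leading '/'."""
    return path.lstrip("/")

def violates(sanitize, path: str) -> bool:
    """Property: a sanitized archive path must never be absolute."""
    return sanitize(path).startswith("/")

def random_path(rng: random.Random) -> str:
    # Build paths from a tiny alphabet of path-like tokens.
    return "".join(rng.choices(["/", "a", "b", ".", ".."], k=rng.randint(0, 8)))

def find_counterexample(sanitize, trials: int = 10_000, seed: int = 0):
    """Generate random inputs and return the first one that breaks the property."""
    rng = random.Random(seed)
    for _ in range(trials):
        path = random_path(rng)
        if violates(sanitize, path):
            return path
    return None

def shrink(sanitize, path: str) -> str:
    """Greedily delete characters while the input still fails, to get a minimal case."""
    changed = True
    while changed:
        changed = False
        for i in range(len(path)):
            candidate = path[:i] + path[i + 1:]
            if violates(sanitize, candidate):
                path, changed = candidate, True
                break
    return path

found = find_counterexample(naive_strip_root)
print(repr(found), "shrinks to", repr(shrink(naive_strip_root, found)))
print(find_counterexample(fixed_strip_root))  # None
```

The shrunk counterexample for the naive version is `"//"`: exactly the "repeated path root" case, found without anyone having to imagine it.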

We can't uncover and test every edge case, nor should we attempt to. But we can be systematic about exploration and prioritization:

This approach resolves an age-old tension: being thorough without being paranoid. Engineers can focus on building features while automated systems handle the combinatorial explosion of edge cases. You get the benefits of defensive programming without the productivity tax.

The current discourse around AI and software development largely misses the point. The interesting question isn't whether AI will replace engineers—it's how AI changes the economics of engineering thoroughness.

Engineers are excellent at spotting patterns that matter, but terrible at exhaustive exploration. Give an engineer an API endpoint to review, and they'll immediately identify critical edge cases based on experience. But they won't (and can’t!) systematically consider every combination of inputs, timing conditions, and system states. The human mind naturally optimizes for insight over completeness.

AI inverts this equation. It lacks an engineer's intuition for which edge cases matter most, but excels at methodical exploration of possibility spaces. It can discover edge cases that experienced engineers miss, not because it's smarter, but because it's willing to explore paths that humans would dismiss as uninteresting or unlikely. Consider race conditions: humans think about the obvious ones, while AI can surface the obscure ones that only happen during leap years when a cache expires.
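
Exhaustive exploration is easiest to see on a tiny race. The sketch below is a miniature model checker, not how any particular AI tool works: it enumerates every interleaving of two unsynchronized "read, then write read + 1" increments and counts the schedules that lose an update. A human reviewer might check one or two orderings; the enumeration checks all of them.

```python
from itertools import combinations

def interleavings(steps_a: int, steps_b: int):
    """Yield every schedule of A and B steps that keeps each worker's own order."""
    total = steps_a + steps_b
    for a_slots in combinations(range(total), steps_a):
        yield tuple("A" if i in a_slots else "B" for i in range(total))

def run(schedule) -> int:
    """Simulate two unsynchronized increments: each worker reads, then writes read + 1."""
    counter = 0
    seen = {"A": None, "B": None}  # value each worker read
    done = {"A": 0, "B": 0}        # steps each worker has taken
    for worker in schedule:
        if done[worker] == 0:
            seen[worker] = counter      # step 1: read the shared counter
        else:
            counter = seen[worker] + 1  # step 2: write back read value + 1
        done[worker] += 1
    return counter

results = {schedule: run(schedule) for schedule in interleavings(2, 2)}
lost_updates = [s for s, value in results.items() if value != 2]
print(f"{len(lost_updates)} of {len(results)} schedules lose an update")  # 4 of 6
```

Only the two fully sequential schedules produce 2; every interleaved one silently produces 1. Real systems have far more steps and states, which is exactly why this kind of search is better delegated to tools.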

This complementarity is powerful. Engineers can focus on judging which edge cases matter, the part humans do best, while AI handles exhaustive exploration. It's like running a SQL query instead of manually combing through database records: the query isn't smarter, just more comprehensive and tireless.

This shift fundamentally changes the cost-benefit equation of thorough testing. Traditional testing faces diminishing returns: each additional test case requires human effort to conceive, write, and maintain. Teams make rational tradeoffs, testing the most likely scenarios and accepting risk for edge cases.

AI-assisted testing breaks this tradeoff: the marginal cost of considering another edge case approaches zero. Engineers can focus their finite mental energy on judging which edge cases matter, rather than trying to imagine all possible cases. This isn't simply about replacing test writing; it's about expanding what's practical to test. When exploring edge cases becomes nearly free, teams can achieve levels of thoroughness that would be economically impossible with pure human effort.

The real impact emerges when AI becomes part of the development feedback loop. Your test suite can go from a static safety net to an exploration system embedded in your existing CI/CD pipeline, continually discovering new failure modes and vulnerabilities as the codebase evolves.

It is worth emphasizing that these capabilities don't diminish the role of human judgment; instead, they enhance it. Engineers still need to:

AI simply makes it practical to be more thorough in executing these human decisions. The future of software quality isn't about replacing human judgment; it's about giving that judgment the scope and scale it deserves. Great engineers have always had an intuition for where systems break; now we can validate that intuition continuously and extensively.

How do we circumvent the limitations of human psychology we saw earlier? Let's start by making pessimism systematic. Let's build CI pipelines that don't just check whether tests pass, but actively search for edge cases and potential failure modes. Engineers remain thoughtful but don't burn cognitive cycles trying to imagine everything; the tools surface potential issues automatically.

The result looks deceptively simple: Engineers write code and basic tests. Automated systems explore failure scenarios and generate tests. CI runs everything. Engineers review results and make informed decisions. The system learns from these decisions, and each cycle makes the next one better.
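
One minimal way to get the "learns from each cycle" part is to persist every counterexample so that later CI runs replay it before exploring further; Hypothesis's example database works in a similar spirit. The sketch below is an assumption-laden illustration, not a prescribed pipeline: `truncate`, `ci_step`, and `corpus.json` are hypothetical names, and the engineer-review step is left out.

```python
import json
import random
import tempfile
from pathlib import Path

def truncate(text: str, limit: int) -> str:
    """Hypothetical function under test: shorten text to at most `limit` characters."""
    return text if len(text) <= limit else text[: limit - 3] + "..."

def holds(case: list) -> bool:
    """Property: the output never exceeds the limit."""
    text, limit = case
    return len(truncate(text, limit)) <= limit

def random_case(rng: random.Random) -> list:
    # Lists (not tuples) so cases survive a JSON round trip unchanged.
    return ["x" * rng.randint(0, 10), rng.randint(0, 10)]

def ci_step(corpus_file: Path, trials: int = 500, seed: int = 0) -> list:
    """One CI run: replay saved failures, explore new inputs, persist new failures."""
    corpus = json.loads(corpus_file.read_text()) if corpus_file.exists() else []
    failures = [case for case in corpus if not holds(case)]
    rng = random.Random(seed)
    for _ in range(trials):
        case = random_case(rng)
        if not holds(case) and case not in corpus:
            corpus.append(case)
            failures.append(case)
    corpus_file.write_text(json.dumps(corpus))
    return failures

with tempfile.TemporaryDirectory() as tmp:
    corpus_file = Path(tmp) / "corpus.json"
    first = ci_step(corpus_file)
    replayed = ci_step(corpus_file, trials=0)  # no exploration, replay only
    print(len(first), "failures found;", len(replayed), "replayed on the next run")
```

Every failure here has a limit below 3, where appending `"..."` alone overflows the budget. Once an engineer fixes `truncate`, the replayed corpus becomes a permanent regression suite that passes.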

Software systems have traditionally faced a brutal tradeoff: either invest engineering hours exponentially as you scale, or watch quality deteriorate. Add a service, multiply your edge cases. Add an API, multiply your failure modes. Every new integration increases your testing surface faster than your ability to cover it, and manual testing simply can't keep up with this combinatorial explosion.
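
A back-of-the-envelope count shows how quickly this multiplies. Assume, purely for illustration, that each of $n$ services is independently in one of $s_i$ states (say healthy, slow, or failing, so $s_i = 3$). The joint state space and the number of pairwise integration points are:

$$
|S| = \prod_{i=1}^{n} s_i = s^{n}, \qquad \text{pairs} = \binom{n}{2} = \frac{n(n-1)}{2}
$$

$$
n = 4:\; 3^{4} = 81,\ \binom{4}{2} = 6 \qquad\longrightarrow\qquad n = 5:\; 3^{5} = 243,\ \binom{5}{2} = 10
$$

Adding a fifth service triples the joint states while adding four new integration points to reason about.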

But automated failure discovery fundamentally changes this equation. When machines systematically explore interaction patterns, the cost of finding edge cases decreases dramatically. Yes, you still need engineers to judge which edge cases matter. But you're no longer asking them to imagine every possible failure condition of a distributed system. Moreover, it will be much easier in this paradigm to gradually build up a suite of meaningful tests that cover these new possible failure scenarios as you add functionality.

The next generation of engineering teams won't distinguish between writing code and ensuring its quality. Just as we now take for granted that every commit runs through CI, they'll take for granted that every change is automatically explored for edge cases. This is already happening in pockets across the industry: teams are building these capabilities into their development infrastructure and treating systematic testing as just as fundamental as version control.

Again, the most profound change isn't actually technical; it's cultural. When teams have confidence in their ability to catch edge cases systematically, they design more ambitious systems, make bolder architectural changes, and focus more on innovation than risk management. Engineering leaders who understand this shift aren't just adopting new tools; they're reshaping how their teams think about quality, velocity, and risk. They recognize that the choice between quality and speed is a false dichotomy: systematic failure discovery makes such tradeoffs unnecessary.

I believe the next evolution in software quality is here. Teams that embrace systematic failure detection won't just ship more reliable code; they'll ship faster, with more confidence, and spend less time fighting fires. They'll attract and retain better talent because engineers want to work on teams where they can build with confidence.

Start small. Pick one critical service — the one that keeps you up at night. Implement automated failure discovery and testing. Watch how it changes not just your test coverage, but your team's confidence and creativity. Then expand. The future of engineering excellence isn't about choosing between quality and velocity. It's about building systems that make such a choice unnecessary. The tools exist. These patterns are known.

Let's engineer tomorrow's reliability, today.